Mirror of https://github.com/Azure/cosmos-explorer.git, synced 2025-12-23 10:51:30 +00:00.

Compare commits: remove-ru- ... fail-safe- (127 commits)

| SHA1 |
|---|
| 26210bcdc5 |
| 498c39c877 |
| d62134d228 |
| 87e016f03c |
| 3a1841ad3c |
| d314a20b81 |
| 7188e8d8c2 |
| 3cd2ec93f2 |
| b8e9903287 |
| 4127d0f522 |
| 56b5a9861b |
| 10664162c7 |
| cf01ffa957 |
| 3cc1945140 |
| 864d9393f2 |
| 8629bcbe2d |
| 6c90ef2e62 |
| 2d2d8b6efe |
| 7cbf7202b0 |
| e8e5eb55cb |
| f0c82a430b |
| 3777b6922e |
| aec951694a |
| 07474b8271 |
| e092e5140f |
| 1f4074f3e8 |
| 1ec0d9a0be |
| eddc334cb5 |
| 22d8a7a1be |
| 4210e0752b |
| b217d4be1b |
| 81fd442fad |
| 87f7dd2230 |
| 9926fd97a2 |
| 2a7546e0de |
| 4b442dd869 |
| f0b4737313 |
| 8dc5ed590a |
| afaa844d28 |
| 3e5a876ef2 |
| 51abf1560a |
| 1c0fed88c0 |
| 93cfd52e36 |
| 3fd014ddad |
| 3b6fda4fa5 |
| db7c45c9b8 |
| 4f6b75fe79 |
| 5038a01079 |
| e0063c76d9 |
| 9278654479 |
| 59113d7bbf |
| 88d8200c14 |
| 6aaddd9c60 |
| f8ede0cc1e |
| bddb288a89 |
| a14d20a88e |
| f1db1ed978 |
| 86a483c3a4 |
| 263262a040 |
| bd4d8da065 |
| 59ec18cd9b |
| 49bf8c60db |
| b0b973b21a |
| 3529e80f0d |
| a298fd8389 |
| 1ecc467f60 |
| b3cafe3468 |
| 4be53284b5 |
| c1937ca464 |
| 2b2de7c645 |
| 8c40df0fa1 |
| fcbc9474ea |
| 81f861af39 |
| 9afa29cdb6 |
| 9a1e8b2d87 |
| babda4d9cb |
| 9d20a13dd4 |
| 3effbe6991 |
| af53697ff4 |
| b1ad80480e |
| 9247a6c4a2 |
| 767d46480e |
| 2d98c5d269 |
| 6627172a52 |
| 19fa5e17a5 |
| a4a367a212 |
| 983c9201bb |
| 76d7f00a90 |
| 6490597736 |
| 229119e697 |
| ceefd7c615 |
| 6e619175c6 |
| 08e8bf4bcf |
| 89dc0f394b |
| 30e0001b7f |
| 4a8f408112 |
| e801364800 |
| a55f2d0de9 |
| d40b1aa9b5 |
| cc63cdc1fd |
| c3058ee5a9 |
| b000631a0c |
| e8f4c8f93c |
| 16bde97e47 |
| 6da43ee27b |
| ebae484b8f |
| dfb1b50621 |
| f54e8eb692 |
| ea39c1d092 |
| c21f42159f |
| 31e4b49f11 |
| 40491ec9c5 |
| e133df18dd |
| 0532ed26a2 |
| fd60c9c15e |
| 04ab1f3918 |
| b784ac0f86 |
| 28899f63d7 |
| 9cbf632577 |
| 17fd2185dc |
| a93c8509cd |
| 5c93c11bd9 |
| 85d2378d3a |
| 84b6075ee8 |
| d880723be9 |
| 4ce9dcc024 |
| addcfedd5e |

@@ -3,7 +3,13 @@ PORTAL_RUNNER_PASSWORD=
PORTAL_RUNNER_SUBSCRIPTION=
PORTAL_RUNNER_RESOURCE_GROUP=
PORTAL_RUNNER_DATABASE_ACCOUNT=
PORTAL_RUNNER_DATABASE_ACCOUNT_KEY=
PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT=
PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT_KEY=
PORTAL_RUNNER_CONNECTION_STRING=
NOTEBOOKS_TEST_RUNNER_TENANT_ID=
NOTEBOOKS_TEST_RUNNER_CLIENT_ID=
NOTEBOOKS_TEST_RUNNER_CLIENT_SECRET=
CASSANDRA_CONNECTION_STRING=
MONGO_CONNECTION_STRING=
TABLES_CONNECTION_STRING=
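
The template above leaves every value blank. Values are supplied locally, or in CI from `secrets.*`. The sketch below makes a test bootstrap fail fast when a required variable is unset. It is a minimal sketch under stated assumptions:

- `REQUIRED_E2E_VARS` is a hypothetical subset of the names above.
- `findMissingVars` is a hypothetical helper; nothing in the repository is known to provide it.
- The environment is passed in as a plain object, so the function needs no Node.js typings.

```typescript
// Hypothetical subset of the variable names from the template above.
const REQUIRED_E2E_VARS: readonly string[] = [
  "PORTAL_RUNNER_CONNECTION_STRING",
  "MONGO_CONNECTION_STRING",
  "CASSANDRA_CONNECTION_STRING",
  "TABLES_CONNECTION_STRING",
];

type EnvMap = Record<string, string | undefined>;

// Hypothetical helper: returns every required name whose value is unset or blank, in list order.
function findMissingVars(env: EnvMap, names: readonly string[] = REQUIRED_E2E_VARS): string[] {
  return names.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Example: only the SQL connection string is set.
const missing = findMissingVars({ PORTAL_RUNNER_CONNECTION_STRING: "AccountEndpoint=https://localhost:8081/;" });
if (missing.length > 0) {
  console.error(`Missing environment variables: ${missing.join(", ")}`);
}
```

In a Node.js script the call would be `findMissingVars(process.env)`. Blank strings count as missing because the template itself ships with empty values.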

@@ -14,7 +14,6 @@ src/Common/DataAccessUtilityBase.ts
src/Common/DeleteFeedback.ts
src/Common/DocumentClientUtilityBase.ts
src/Common/EditableUtility.ts
src/Common/EnvironmentUtility.ts
src/Common/HashMap.test.ts
src/Common/HashMap.ts
src/Common/HeadersUtility.test.ts

@@ -43,7 +42,6 @@ src/Contracts/ViewModels.ts
src/Controls/Heatmap/Heatmap.test.ts
src/Controls/Heatmap/Heatmap.ts
src/Controls/Heatmap/HeatmapDatatypes.ts
src/Definitions/adal.d.ts
src/Definitions/datatables.d.ts
src/Definitions/gif.d.ts
src/Definitions/globals.d.ts

@@ -89,7 +87,7 @@ src/Explorer/DataSamples/ContainerSampleGenerator.test.ts
src/Explorer/DataSamples/ContainerSampleGenerator.ts
src/Explorer/DataSamples/DataSamplesUtil.test.ts
src/Explorer/DataSamples/DataSamplesUtil.ts
src/Explorer/Explorer.ts
src/Explorer/Explorer.tsx
src/Explorer/Graph/GraphExplorerComponent/ArraysByKeyCache.test.ts
src/Explorer/Graph/GraphExplorerComponent/ArraysByKeyCache.ts
src/Explorer/Graph/GraphExplorerComponent/D3ForceGraph.test.ts

@@ -164,7 +162,7 @@ src/Explorer/Panes/Tables/Validators/EntityPropertyValidationCommon.ts
src/Explorer/Panes/Tables/Validators/EntityPropertyValueValidator.ts
src/Explorer/Panes/UploadFilePane.ts
src/Explorer/Panes/UploadItemsPane.ts
src/Explorer/SplashScreen/SplashScreenComponentAdapter.test.ts
src/Explorer/SplashScreen/SplashScreen.test.ts
src/Explorer/Tables/Constants.ts
src/Explorer/Tables/DataTable/CacheBase.ts
src/Explorer/Tables/DataTable/DataTableBindingManager.ts

@@ -202,9 +200,6 @@ src/Explorer/Tabs/QueryTab.test.ts
src/Explorer/Tabs/QueryTab.ts
src/Explorer/Tabs/QueryTablesTab.ts
src/Explorer/Tabs/ScriptTabBase.ts
src/Explorer/Tabs/SettingsTab.test.ts
src/Explorer/Tabs/SettingsTab.ts
src/Explorer/Tabs/SparkMasterTab.ts
src/Explorer/Tabs/StoredProcedureTab.ts
src/Explorer/Tabs/TabComponents.ts
src/Explorer/Tabs/TabsBase.ts

@@ -245,9 +240,6 @@ src/Platform/Hosted/Authorization.ts
src/Platform/Hosted/DataAccessUtility.ts
src/Platform/Hosted/ExplorerFactory.ts
src/Platform/Hosted/Helpers/ConnectionStringParser.test.ts
src/Platform/Hosted/Helpers/ConnectionStringParser.ts
src/Platform/Hosted/HostedUtils.test.ts
src/Platform/Hosted/HostedUtils.ts
src/Platform/Hosted/Main.ts
src/Platform/Hosted/Maint.test.ts
src/Platform/Hosted/NotificationsClient.ts

@@ -290,8 +282,6 @@ src/Utils/DatabaseAccountUtils.ts
src/Utils/JunoUtils.ts
src/Utils/MessageValidation.ts
src/Utils/NotebookConfigurationUtils.ts
src/Utils/OfferUtils.test.ts
src/Utils/OfferUtils.ts
src/Utils/PricingUtils.test.ts
src/Utils/QueryUtils.test.ts
src/Utils/QueryUtils.ts

@@ -386,8 +376,7 @@ src/Explorer/Notebook/temp/inputs/editor.tsx
src/Explorer/Notebook/temp/markdown-cell.tsx
src/Explorer/Notebook/temp/source.tsx
src/Explorer/Notebook/temp/syntax-highlighter/index.tsx
src/Explorer/SplashScreen/SplashScreenComponent.tsx
src/Explorer/SplashScreen/SplashScreenComponentApdapter.tsx
src/Explorer/SplashScreen/SplashScreen.tsx
src/Explorer/Tabs/GalleryTab.tsx
src/Explorer/Tabs/NotebookViewerTab.tsx
src/Explorer/Tabs/TerminalTab.tsx

@@ -396,19 +385,5 @@ src/Explorer/Tree/ResourceTreeAdapterForResourceToken.tsx
src/GalleryViewer/Cards/GalleryCardComponent.tsx
src/GalleryViewer/GalleryViewer.tsx
src/GalleryViewer/GalleryViewerComponent.tsx
cypress/integration/dataexplorer/CASSANDRA/addCollection.spec.ts
cypress/integration/dataexplorer/GRAPH/addCollection.spec.ts
cypress/integration/dataexplorer/ci-tests/addCollectionPane.spec.ts
cypress/integration/dataexplorer/ci-tests/createDatabase.spec.ts
cypress/integration/dataexplorer/ci-tests/deleteCollection.spec.ts
cypress/integration/dataexplorer/ci-tests/deleteDatabase.spec.ts
cypress/integration/dataexplorer/MONGO/addCollection.spec.ts
cypress/integration/dataexplorer/MONGO/addCollectionAutopilot.spec.ts
cypress/integration/dataexplorer/MONGO/addCollectionExistingDatabase.spec.ts
cypress/integration/dataexplorer/MONGO/provisionDatabaseThroughput.spec.ts
cypress/integration/dataexplorer/SQL/addCollection.spec.ts
cypress/integration/dataexplorer/TABLE/addCollection.spec.ts
cypress/integration/notebook/newNotebook.spec.ts
cypress/integration/notebook/resourceTree.spec.ts
__mocks__/monaco-editor.ts
src/Explorer/Tree/ResourceTreeAdapterForResourceToken.test.tsx

.eslintrc.js (31 lines changed)

@@ -1,41 +1,39 @@
module.exports = {
env: {
browser: true,
es6: true
es6: true,
},
plugins: ["@typescript-eslint", "no-null", "prefer-arrow"],
extends: ["eslint:recommended", "plugin:@typescript-eslint/recommended"],
globals: {
Atomics: "readonly",
SharedArrayBuffer: "readonly"
SharedArrayBuffer: "readonly",
},
parser: "@typescript-eslint/parser",
parserOptions: {
ecmaFeatures: {
jsx: true
jsx: true,
},
ecmaVersion: 2018,
sourceType: "module"
sourceType: "module",
},
overrides: [
{
files: ["**/*.tsx"],
env: {
jest: true
},
extends: ["plugin:react/recommended"],
plugins: ["react"]
extends: ["plugin:react/recommended"], // TODO: Add react-hooks
plugins: ["react"],
},
{
files: ["**/*.{test,spec}.{ts,tsx}"],
env: {
jest: true
jest: true,
},
extends: ["plugin:jest/recommended"],
plugins: ["jest"]
}
plugins: ["jest"],
},
],
rules: {
"no-console": ["error", { allow: ["error", "warn", "dir"] }],
curly: "error",
"@typescript-eslint/no-unused-vars": "error",
"@typescript-eslint/no-extraneous-class": "error",

@@ -43,12 +41,13 @@ module.exports = {
"@typescript-eslint/no-explicit-any": "error",
"prefer-arrow/prefer-arrow-functions": ["error", { allowStandaloneDeclarations: true }],
eqeqeq: "error",
"react/display-name": "off",
"no-restricted-syntax": [
"error",
{
selector: "CallExpression[callee.object.name='JSON'][callee.property.name='stringify'] Identifier[name=/$err/]",
message: "Do not use JSON.stringify(error). It will print '{}'"
}
]
}
message: "Do not use JSON.stringify(error). It will print '{}'",
},
],
},
};
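
The `no-restricted-syntax` rule above guards against a real pitfall. An `Error`'s `message` and `stack` are own but non-enumerable properties, so `JSON.stringify` skips them and prints `{}`. The sketch below shows the behavior and one workaround; `serializeError` is a hypothetical helper, not an existing project API.

It also tests the selector's regex. `/$err/` places the end-of-input anchor `$` before `err`, so as written it appears unable to match any identifier. `/^err/` would match names beginning with `err`, which seems to be the intent.

```typescript
// Error#message and Error#stack are own but non-enumerable, so JSON.stringify skips them.
const failure = new Error("Request failed");
console.log(JSON.stringify(failure)); // prints {}

// Hypothetical helper: copy the fields worth logging into a plain object before serializing.
function serializeError(error: unknown): string {
  if (error instanceof Error) {
    return JSON.stringify({ name: error.name, message: error.message });
  }
  // JSON.stringify(undefined) returns undefined at runtime, so fall back to String().
  const json = JSON.stringify(error);
  return typeof json === "string" ? json : String(error);
}

console.log(serializeError(failure)); // prints {"name":"Error","message":"Request failed"}

// The selector's regex: "$" anchors to the end of input, so "$err" can never match.
console.log(/$err/.test("error")); // prints false
console.log(/^err/.test("error")); // prints true
```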

.github/workflows/ci.yml (vendored, 119 lines changed)

@@ -9,6 +9,20 @@ on:
branches:
- master
jobs:
codemetrics:
runs-on: ubuntu-latest
name: "Log Code Metrics"
if: github.ref == 'refs/heads/master'
steps:
- uses: actions/checkout@v2
- name: Use Node.js 12.x
uses: actions/setup-node@v1
with:
node-version: 12.x
- run: npm ci
- run: node utils/codeMetrics.js
env:
CODE_METRICS_APP_ID: ${{ secrets.CODE_METRICS_APP_ID }}
compile:
runs-on: ubuntu-latest
name: "Compile TypeScript"

@@ -79,32 +93,32 @@ jobs:
name: dist
path: dist/
endtoendemulator:
name: "End To End Tests | Emulator | SQL"
name: "End To End Emulator Tests"
needs: [lint, format, compile, unittest]
runs-on: windows-latest
steps:
- uses: actions/checkout@v2
- uses: southpolesteve/cosmos-emulator-github-action@v1
- name: Use Node.js 12.x
uses: actions/setup-node@v1
with:
node-version: 12.x
- name: Restore Cypress Binary Cache
uses: actions/cache@v2
with:
path: ~/.cache/Cypress
key: ${{ runner.os }}-cypress-binary-cache
- uses: southpolesteve/cosmos-emulator-github-action@v1
- name: End to End Tests
run: |
npm ci
npm start &
npm ci --prefix ./cypress
npm run test:ci --prefix ./cypress -- --spec ./integration/dataexplorer/ci-tests/createDatabase.spec.ts
npm run wait-for-server
npx jest -c ./jest.config.e2e.js --detectOpenHandles test/sql/container.spec.ts
shell: bash
env:
EMULATOR_ENDPOINT: https://0.0.0.0:8081/
DATA_EXPLORER_ENDPOINT: "https://localhost:1234/explorer.html?platform=Emulator"
PLATFORM: "Emulator"
NODE_TLS_REJECT_UNAUTHORIZED: 0
CYPRESS_CACHE_FOLDER: ~/.cache/Cypress
- uses: actions/upload-artifact@v2
if: failure()
with:
name: screenshots
path: failed-*
accessibility:
name: "Accessibility | Hosted"
needs: [lint, format, compile, unittest]

@@ -123,43 +137,80 @@ jobs:
sudo sysctl -p
npm ci
npm start &
npx wait-on -i 5000 https-get://0.0.0.0:1234/
npx wait-on -i 5000 https-get://0.0.0.0:1234/
node utils/accesibilityCheck.js
shell: bash
env:
NODE_TLS_REJECT_UNAUTHORIZED: 0
endtoendpuppeteer:
name: "End to end puppeteer tests"
needs: [lint, format, compile, unittest]
endtoendhosted:
name: "End to End Tests"
needs: [cleanupaccounts]
runs-on: ubuntu-latest
env:
NODE_TLS_REJECT_UNAUTHORIZED: 0
PORTAL_RUNNER_SUBSCRIPTION: ${{ secrets.PORTAL_RUNNER_SUBSCRIPTION }}
PORTAL_RUNNER_RESOURCE_GROUP: ${{ secrets.PORTAL_RUNNER_RESOURCE_GROUP }}
PORTAL_RUNNER_DATABASE_ACCOUNT: ${{ secrets.PORTAL_RUNNER_DATABASE_ACCOUNT }}
PORTAL_RUNNER_DATABASE_ACCOUNT_KEY: ${{ secrets.PORTAL_RUNNER_DATABASE_ACCOUNT_KEY }}
PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT: ${{ secrets.PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT }}
PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT_KEY: ${{ secrets.PORTAL_RUNNER_MONGO_DATABASE_ACCOUNT_KEY }}
NOTEBOOKS_TEST_RUNNER_TENANT_ID: ${{ secrets.NOTEBOOKS_TEST_RUNNER_TENANT_ID }}
NOTEBOOKS_TEST_RUNNER_CLIENT_ID: ${{ secrets.NOTEBOOKS_TEST_RUNNER_CLIENT_ID }}
NOTEBOOKS_TEST_RUNNER_CLIENT_SECRET: ${{ secrets.NOTEBOOKS_TEST_RUNNER_CLIENT_SECRET }}
PORTAL_RUNNER_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_SQL }}
MONGO_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_MONGO }}
CASSANDRA_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_CASSANDRA }}
TABLES_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_TABLE }}
DATA_EXPLORER_ENDPOINT: "https://localhost:1234/hostedExplorer.html"
strategy:
matrix:
test-file:
- ./test/cassandra/container.spec.ts
- ./test/mongo/container.spec.ts
- ./test/mongo/mongoIndexPolicy.spec.ts
- ./test/mongo/openMongoAccount.spec.ts
- ./test/notebooks/uploadAndOpenNotebook.spec.ts
- ./test/selfServe/selfServeExample.spec.ts
- ./test/sql/container.spec.ts
- ./test/sql/resourceToken.spec.ts
- ./test/tables/container.spec.ts
steps:
- uses: actions/checkout@v2
- name: Use Node.js 12.x
- name: Use Node.js 14.x
uses: actions/setup-node@v1
with:
node-version: 12.x
- name: End to End Puppeteer Tests
run: |
npm ci
npm start &
npm run wait-for-server
npm run test:e2e
node-version: 14.x
- run: npm ci
- run: npm start &
- run: node utils/cleanupDBs.js
- run: npm run wait-for-server
- name: ${{ matrix['test-file'] }}
run: npx jest -c ./jest.config.e2e.js --detectOpenHandles ${{ matrix['test-file'] }}
shell: bash
env:
NODE_TLS_REJECT_UNAUTHORIZED: 0
PORTAL_RUNNER_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_SQL }}
MONGO_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_MONGO }}
CASSANDRA_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_CASSANDRA }}
TABLES_CONNECTION_STRING: ${{ secrets.CONNECTION_STRING_TABLE }}
DATA_EXPLORER_ENDPOINT: "https://localhost:1234/hostedExplorer.html"
- uses: actions/upload-artifact@v2
if: failure()
with:
name: screenshots
path: failed-*
cleanupaccounts:
name: "Cleanup Test Database Accounts"
needs: [lint, format, compile, unittest]
runs-on: ubuntu-latest
env:
NOTEBOOKS_TEST_RUNNER_CLIENT_ID: ${{ secrets.NOTEBOOKS_TEST_RUNNER_CLIENT_ID }}
NOTEBOOKS_TEST_RUNNER_CLIENT_SECRET: ${{ secrets.NOTEBOOKS_TEST_RUNNER_CLIENT_SECRET }}
steps:
- uses: actions/checkout@v2
- name: Use Node.js 14.x
uses: actions/setup-node@v1
with:
node-version: 14.x
- run: npm ci
- run: node utils/cleanupDBs.js
nuget:
name: Publish Nuget
if: github.ref == 'refs/heads/master' || contains(github.ref, 'hotfix/') || contains(github.ref, 'release/')
needs: [lint, format, compile, build, unittest, endtoendemulator, endtoendpuppeteer]
needs: [build]
runs-on: ubuntu-latest
env:
NUGET_SOURCE: ${{ secrets.NUGET_SOURCE }}

@@ -175,7 +226,7 @@ jobs:
- run: cp ./configs/prod.json config.json
- run: nuget sources add -Name "ADO" -Source "$NUGET_SOURCE" -UserName "GitHub" -Password "$AZURE_DEVOPS_PAT"
- run: nuget pack -Version "2.0.0-github-${GITHUB_SHA}"
- run: nuget push -Source "$NUGET_SOURCE" -ApiKey Az *.nupkg
- run: nuget push -SkipDuplicate -Source "$NUGET_SOURCE" -ApiKey Az *.nupkg
- uses: actions/upload-artifact@v2
name: packages
with:

@@ -183,7 +234,7 @@ jobs:
nugetmpac:
name: Publish Nuget MPAC
if: github.ref == 'refs/heads/master' || contains(github.ref, 'hotfix/') || contains(github.ref, 'release/')
needs: [lint, format, compile, build, unittest, endtoendemulator, endtoendpuppeteer]
needs: [build]
runs-on: ubuntu-latest
env:
NUGET_SOURCE: ${{ secrets.NUGET_SOURCE }}

@@ -200,7 +251,7 @@ jobs:
- run: sed -i 's/Azure.Cosmos.DB.Data.Explorer/Azure.Cosmos.DB.Data.Explorer.MPAC/g' DataExplorer.nuspec
- run: nuget sources add -Name "ADO" -Source "$NUGET_SOURCE" -UserName "GitHub" -Password "$AZURE_DEVOPS_PAT"
- run: nuget pack -Version "2.0.0-github-${GITHUB_SHA}"
- run: nuget push -Source "$NUGET_SOURCE" -ApiKey Az *.nupkg
- run: nuget push -SkipDuplicate -Source "$NUGET_SOURCE" -ApiKey Az *.nupkg
- uses: actions/upload-artifact@v2
name: packages
with:

.github/workflows/runners.yml (vendored, 25 lines changed)

@@ -1,25 +0,0 @@
name: Runners
on:
schedule:
- cron: "0 * 1 * *"
jobs:
sqlcreatecollection:
runs-on: ubuntu-latest
name: "SQL | Create Collection"
steps:
- uses: actions/checkout@v2
- uses: actions/setup-node@v1
- run: npm ci
- run: npm run test:e2e
env:
PORTAL_RUNNER_APP_INSIGHTS_KEY: ${{ secrets.PORTAL_RUNNER_APP_INSIGHTS_KEY }}
PORTAL_RUNNER_USERNAME: ${{ secrets.PORTAL_RUNNER_USERNAME }}
PORTAL_RUNNER_PASSWORD: ${{ secrets.PORTAL_RUNNER_PASSWORD }}
PORTAL_RUNNER_SUBSCRIPTION: 69e02f2d-f059-4409-9eac-97e8a276ae2c
PORTAL_RUNNER_RESOURCE_GROUP: runners
PORTAL_RUNNER_DATABASE_ACCOUNT: portal-sql-runner
- uses: actions/upload-artifact@v2
if: failure()
with:
name: screenshots
path: failure.png

.gitignore (vendored, 3 lines changed)

@@ -9,9 +9,6 @@ pkg/DataExplorer/*
test/out/*
workers/**/*.js
*.trx
cypress/videos
cypress/screenshots
cypress/fixtures
notebookapp/*
Contracts/*
.DS_Store

.vs/slnx.sqlite (BIN, normal file)

Binary file not shown.

CODING_GUIDELINES.md (normal file, 194 lines changed)

@@ -0,0 +1,194 @@

# Coding Guidelines and Recommendations

Cosmos Explorer has been under constant development for over 5 years. As a result, there are many different patterns and practices in the codebase. This document serves as a guide to how we write code and helps avoid propagating practices that are no longer preferred. Each requirement in this document is labeled and color-coded to show its relative importance. In order from highest to lowest importance:

✅ DO this. If you feel you need an exception, engage with the project owners _prior_ to implementation.

⛔️ DO NOT do this. If you feel you need an exception, engage with the project owners _prior_ to implementation.

☑️ YOU SHOULD strongly consider this, but it is not a requirement. If you do not follow this advice, add a code comment explaining why and proactively begin a discussion as part of the PR process.

⚠️ YOU SHOULD NOT: strongly consider not doing this. If you do not follow this advice, add a code comment explaining why and proactively begin a discussion as part of the PR process.

💭 YOU MAY consider this advice if appropriate to your situation. Other team members may comment on this as part of PR review, but there is no need to be proactive.

## Development Environment

☑️ YOU SHOULD

- Use VSCode and install the following extensions. This setup will catch most linting, formatting, and type errors as you develop:
  - [Prettier](https://marketplace.visualstudio.com/items?itemName=esbenp.prettier-vscode)
  - [ESLint](https://marketplace.visualstudio.com/items?itemName=dbaeumer.vscode-eslint)

💭 YOU MAY

- Use the [GitHub CLI](https://cli.github.com/). It has helpful workflows for submitting PRs as well as for checking out other team members' PRs.
- Use Windows, Linux (including WSL), or OSX. We have team members developing in all three environments.

✅ DO

- Maintain cross-platform compatibility when modifying any engineering or build systems.

## Code Formatting

✅ DO

- Use [Prettier](https://prettier.io/) to format your code
  - This will occur automatically if using the recommended editor setup
  - `npm run format` will also format code

## Linting

✅ DO

- Use [ESLint](https://eslint.org/) to check for code errors.
  - This will occur automatically if using the recommended editor setup
  - `npm run lint` will also check for linting errors

💭 YOU MAY

- Consider adding new lint rules.
  - If you find yourself performing "nits" as part of PR review, consider adding a lint rule that will automatically catch the error in the future

⚠️ YOU SHOULD NOT

- Disable lint rules
  - Lint rules exist as guidance and to catch common mistakes
  - You will find places where we disable specific lint rules; however, this should be exceptional.
  - If a rule does need to be disabled, prefer disabling it for a specific line instead of the entire file.
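
The line-level suppression recommended above can look like the sketch below: a justification comment plus `eslint-disable-next-line` keeps the rest of the file fully checked. Assumptions:

- `parseLegacyPayload` and `LegacyPayload` are hypothetical.
- `@typescript-eslint/no-explicit-any` is used only because the ESLint config in this diff enables it.

```typescript
interface LegacyPayload {
  id: string;
  count: number;
}

// Justification: the (hypothetical) upstream message format is untyped.
// Only the next line is exempt from the rule; every other line in the file is still linted.
// eslint-disable-next-line @typescript-eslint/no-explicit-any
function parseLegacyPayload(raw: any): LegacyPayload {
  return { id: String(raw.id), count: Number(raw.count) };
}

// By contrast, a file-level disable comment at the top of the file would silence the rule
// for every line below it, which the guideline discourages.
const payload = parseLegacyPayload({ id: 7, count: "3" });
console.log(payload.id, payload.count);
```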

⛔️ DO NOT

- Add [TSLint](https://palantir.github.io/tslint/) rules
  - TSLint has been deprecated and is on track to be removed
  - Always prefer ESLint rules

## UI Components

☑️ YOU SHOULD

- Write new components using [React](https://reactjs.org/). We are actively migrating Cosmos Explorer off of [Knockout](https://knockoutjs.com/).
- Use [Fluent](https://developer.microsoft.com/en-us/fluentui#/) components.
  - Fluent components are designed to be highly accessible and composable
  - Using Fluent allows us to build upon the work of the Fluent team and leads to a lower total cost of ownership for UI code

### React

☑️ YOU SHOULD

- Use pure functional components when no state is required

💭 YOU MAY

- Use functional (hooks) or class components
  - The project contains examples of both
  - Neither is strongly preferred at this time

⛔️ DO NOT

- Use inheritance for sharing component behavior.
  - React documentation covers this topic in detail: https://reactjs.org/docs/composition-vs-inheritance.html
- Suffix your file or component name with "Component"
  - Even though the code has examples of it, we are ending the practice.

## Libraries

⚠️ YOU SHOULD NOT

- Add new libraries to package.json.
  - Adding libraries may bring in code that explodes the bundle size or attempts to run NodeJS code in the browser
  - Consult with project owners for help with library selection if one is needed

⛔️ DO NOT

- Use underscore.js
  - Much of this library is now native to JS and will be automatically transpiled
- Use jQuery
  - Much of this library is now native to the DOM.
  - We are planning to remove it

## Testing

⛔️ DO NOT

- Decrease test coverage
  - Unit/Functional test coverage is checked as part of the CI process

### Unit Tests

✅ DO

- Write unit tests for non-UI and utility code.
- Write your tests using [Jest](https://jestjs.io/)

☑️ YOU SHOULD

- Abstract non-UI and utility code so it can run in either the NodeJS or the browser environment
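
The sketch below shows one way to keep utility code environment-agnostic: pass in the data a browser API would provide, rather than reading `window` directly. Assumptions:

- The utility needs the `platform` query parameter, as in the `explorer.html?platform=Emulator` endpoint used by the emulator CI job.
- `getPlatformFromQuery` and the `Platform` union are hypothetical; only `"Emulator"` appears in the CI config.
- It relies on `URLSearchParams`, which is a global in modern browsers and in Node.js 10+, so Jest can exercise the function without a DOM.

```typescript
// Hypothetical union of platform names; only "Emulator" is confirmed by the CI config.
type Platform = "Emulator" | "Hosted" | "Portal";

const KNOWN_PLATFORMS: readonly Platform[] = ["Emulator", "Hosted", "Portal"];

// Pure function: no window/document access, so it runs unchanged in Node.js (Jest) and in browsers.
function getPlatformFromQuery(search: string, fallback: Platform = "Portal"): Platform {
  // URLSearchParams ignores a leading "?".
  const value = new URLSearchParams(search).get("platform");
  return KNOWN_PLATFORMS.find((platform) => platform === value) ?? fallback;
}

// In the browser: getPlatformFromQuery(window.location.search)
console.log(getPlatformFromQuery("?platform=Emulator"));
```

Keeping the `window.location` read at the call site means a unit test only passes a string, with no jsdom or mocking involved.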

### Functional (Component) Tests

✅ DO

- Write tests for UI components
- Write your tests using [Jest](https://jestjs.io/)
- Use either Enzyme or React Testing Library to perform component tests.

### Mocking

✅ DO

- Use Jest's built-in mocking helpers

☑️ YOU SHOULD

- Write code that does not require mocking
  - Build components that do not require mocking extremely large or difficult-to-mock objects (like Explorer.ts). Pass _only_ what you need.
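
The sketch below illustrates the "pass only what you need" advice. The function declares a narrow interface containing just the members it uses, so a test can pass a plain object literal instead of mocking the whole `Explorer`. `DatabaseLister` and `formatDatabaseList` are hypothetical names, not taken from `Explorer.ts`.

```typescript
// Only the capability this function needs, not the full Explorer surface.
interface DatabaseLister {
  listDatabaseIds(): string[];
}

function formatDatabaseList(source: DatabaseLister): string {
  const ids = source.listDatabaseIds();
  // Copy before sorting so the caller's array is not mutated.
  return ids.length === 0 ? "No databases" : [...ids].sort().join(", ");
}

// In a test, an object literal satisfies the interface; no mocking library is required.
const fakeSource: DatabaseLister = { listDatabaseIds: () => ["orders", "customers"] };
console.log(formatDatabaseList(fakeSource)); // prints: customers, orders
```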

⛔️ DO NOT

- Use sinon.js for mocking
  - Sinon has been deprecated and is planned for removal

### End to End Tests

✅ DO

- Use [Puppeteer](https://developers.google.com/web/tools/puppeteer) and [Jest](https://jestjs.io/)
- Write or modify an existing E2E test that covers the primary use case of any major feature.
  - Use caution. Do not try to cover every case. End to End tests can be slow and brittle.

☑️ YOU SHOULD

- Write tests that use accessible attributes (role, title, label, etc.) to perform actions.
  - More information: https://testing-library.com/docs/queries/about#priority

⚠️ YOU SHOULD NOT

- Add test-specific `data-*` attributes to DOM elements
  - This is a common current practice, but one we would like to avoid in the future
  - End to end tests need to use semantic HTML and accessible attributes to be truly end to end
  - No user or screen reader actually navigates an app using `data-*` attributes
- Add arbitrary time delays to wait for the page to render or an element to be ready.
  - All the time delays add up and slow down testing.
  - Prefer using the framework's "wait for..." functionality.
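
The sketch below shows the principle behind framework "wait for" helpers such as Puppeteer's `page.waitForSelector`. The test polls a condition and continues as soon as it holds, failing after a timeout, instead of sleeping a fixed time. `waitFor` is a hypothetical, framework-free helper for illustration only; in Puppeteer tests, the built-in waits remain the preferred option.

```typescript
// Hypothetical helper: polls `condition` every `intervalMs` until it returns a truthy value,
// or rejects once `timeoutMs` has elapsed.
async function waitFor<T>(
  condition: () => T | Promise<T>,
  timeoutMs = 5000,
  intervalMs = 50,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const result = await condition();
    if (result) {
      return result; // resolves as soon as the condition holds; no fixed delay
    }
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Example: a flag flipped asynchronously, standing in for "element is ready".
let ready = false;
setTimeout(() => {
  ready = true;
}, 100);
waitFor(() => ready).then(() => console.log("ready"));
```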

### Migrating Knockout to React

✅ DO

- Consult other team members before beginning migration work. There is a significant amount of flux in the patterns we are using, and it is important that we do not propagate incorrect patterns.
- Start by converting HTML to JSX: https://magic.reactjs.net/htmltojsx.htm. Add functionality as a second step.

☑️ YOU SHOULD

- Write React components that require no dependency on Knockout or observables to trigger rendering.

## Browser Support

✅ DO

- Support all [browsers supported by the Azure Portal](https://docs.microsoft.com/en-us/azure/azure-portal/azure-portal-supported-browsers-devices)
- Support IE11
  - In practice, this should not need to be considered as part of a normal development workflow
  - Polyfills and transpilation are already provided by our engineering systems.
  - This requirement will be removed on March 30th, 2021 when Azure drops IE11 support.

@@ -1,6 +1,6 @@

# Contribution guidelines to Data Explorer

This project welcomes contributions and suggestions. Most contributions require you to agree to a
This project welcomes contributions and suggestions. Most contributions require you to agree to a
Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us
the rights to use your contribution. For details, visit https://cla.microsoft.com.

@@ -13,6 +13,7 @@ For more information see the [Code of Conduct FAQ](https://opensource.microsoft.

contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

## Microsoft Open Source Code of Conduct

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

Resources:

@@ -20,33 +21,3 @@ Resources:

- [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/)
- [Microsoft Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/)
- Contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with questions or concerns

## Browser support

Please make sure to support all modern browsers as well as Internet Explorer 11.

For IE support, using a polyfill is preferred over new usage of lodash or underscore. We already polyfill almost everything by importing babel-polyfill at the top of entry points.

## Coding guidelines, conventions and recommendations

### Typescript

* Follow this [typescript style guide](https://github.com/excelmicro/typescript), which is based on [airbnb's style guide](https://github.com/airbnb/javascript).
* Conventions specific to this project:
  - Use double quotes for strings
  - Don't use `null`, use `undefined`
  - Pascal case for private static readonly fields
  - Camel case for classnames in markup
* Don't use class unless necessary
* Code related to notebooks should be dynamically imported so that it is loaded from a separate bundle only if the account is notebook-enabled. There are already top-level notebook components which are dynamically imported, and their dependencies can be statically imported from these files.
* Prefer using [Fluent UI controls](https://developer.microsoft.com/en-us/fluentui#/controls/web) over creating your own, in order to maintain consistency and support a11y.

|

||||

|

||||
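
A minimal sketch of the dynamic-import rule for notebook code. `createNotebookLoader`, the enablement check and the import callback are hypothetical names, not this repo's API. In the app, the callback would wrap a dynamic `import()` of a top-level notebook component, which webpack emits as a separate chunk. Caching the promise means the chunk is requested at most once, and never for accounts without notebooks.

```javascript
/**
 * Hypothetical helper: returns a function that loads notebook code only when
 * the account is notebook-enabled, and only once.
 * @param {() => boolean} isNotebookEnabled - assumed account capability check
 * @param {() => Promise<object>} importNotebookModule - would wrap a dynamic import()
 */
function createNotebookLoader(isNotebookEnabled, importNotebookModule) {
  let pending; // cached promise, so the separate bundle is fetched only once

  return function loadNotebookModule() {
    if (!isNotebookEnabled()) {
      // Accounts without notebooks never trigger the dynamic import.
      return Promise.resolve(undefined);
    }
    if (pending === undefined) {
      pending = importNotebookModule();
    }
    return pending;
  };
}
```

For example, `createNotebookLoader(() => notebooksEnabled, () => import("./path/to/NotebookComponent"))`, where both arguments are placeholders for whatever the real components use.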

### React
* Prefer React class components over function components and hooks, unless the component is simple and needs no nested functions:
  * Nested functions may be harder to test independently
  * Switching from a function component to a class component later may be painful

## Testing
A PR should not decrease test coverage.

## Recommended Tools and VS Code extensions
* [Bookmarks](https://github.com/alefragnani/vscode-bookmarks)
* [Bracket pair colorizer](https://github.com/CoenraadS/Bracket-Pair-Colorizer-2)
* [GitHub Pull Requests and Issues](https://github.com/Microsoft/vscode-pull-request-github)

53

README.md


@@ -13,29 +13,18 @@ UI for Azure Cosmos DB. Powers the [Azure Portal](https://portal.azure.com/), ht

### Watch mode

Run `npm run watch` to start the development server and automatically rebuild on changes
Run `npm start` to start the development server and automatically rebuild on changes

### Specifying Development Platform
### Hosted Development (https://cosmos.azure.com)

Setting the environment variable `PLATFORM` during the build process forces the explorer to load the specified platform. By default, development builds run in `Hosted` mode. Valid options:

- Hosted
- Emulator
- Portal

`PLATFORM=Emulator npm run watch`
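
A sketch of how a build script might interpret `PLATFORM`, assuming the three values listed above are matched case-sensitively and that an unset variable means `Hosted`. `resolvePlatform` is a hypothetical helper, not the logic in this repo's webpack config.

```javascript
// Platforms listed in the README; "Hosted" is the documented development default.
const VALID_PLATFORMS = ["Hosted", "Emulator", "Portal"];

/**
 * Hypothetical helper: reads PLATFORM from an environment object,
 * falls back to "Hosted" when unset, and rejects unknown values early.
 */
function resolvePlatform(env) {
  const platform = env.PLATFORM;
  if (platform === undefined || platform === "") {
    return "Hosted";
  }
  if (!VALID_PLATFORMS.includes(platform)) {
    throw new Error(`Invalid PLATFORM "${platform}". Expected one of: ${VALID_PLATFORMS.join(", ")}`);
  }
  return platform;
}
```

Called as `resolvePlatform(process.env)`, a typo such as `PLATFORM=emulator` fails at build time instead of silently loading the default platform.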

### Hosted Development

The default webpack dev server configuration proxies requests to the production portal backend: `https://main.documentdb.ext.azure.com`. This allows you to use production connection strings on your local machine.

To run pure hosted mode, in `webpack.config.js` change the `index` HtmlWebpackPlugin to use `hostedExplorer.html`, and change the `index` entry to use `HostedExplorer.ts`.
- Visit: `https://localhost:1234/hostedExplorer.html`
- Local sign-in via AAD will NOT work; only connection strings are supported in dev mode. Use the Portal if you need AAD auth.
- The default webpack dev server configuration proxies requests to the production portal backend: `https://main.documentdb.ext.azure.com`. This allows you to use production connection strings on your local machine.
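
A hedged sketch of the kind of `devServer.proxy` entry that forwards local requests to the production portal backend. `buildHostedProxy` and the `/api` prefix are hypothetical. The real routes live in this repo's `webpack.config.js`. The object form shown here (path prefix mapped to `target`, `changeOrigin`, `secure`) is what webpack-dev-server v3/v4 accept; newer versions expect an array of entries instead.

```javascript
// Production portal backend named in the README.
const PORTAL_BACKEND = "https://main.documentdb.ext.azure.com";

/**
 * Hypothetical helper that builds a webpack-dev-server `proxy` object.
 * Each key is a request path prefix; matching requests are forwarded to `target`.
 */
function buildHostedProxy(pathPrefixes, target = PORTAL_BACKEND) {
  const proxy = {};
  for (const prefix of pathPrefixes) {
    proxy[prefix] = {
      target,
      changeOrigin: true, // rewrite the Host header to match the backend
      secure: true // the production backend presents a valid TLS certificate
    };
  }
  return proxy;
}

// Inside a hypothetical webpack.config.js:
// devServer: { https: true, port: 1234, proxy: buildHostedProxy(["/api"]) }
```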

### Emulator Development

In a Windows environment, running `npm run build` automatically copies the built files from `/dist` to the default emulator install paths. In a non-Windows environment, you can specify an alternate endpoint using `EMULATOR_ENDPOINT`, and the webpack dev server will proxy requests for you.

`PLATFORM=Emulator EMULATOR_ENDPOINT=https://my-vm.azure.com:8081 npm run watch`
- Start the Cosmos Emulator
- Visit: https://localhost:1234/index.html
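
A sketch of how the proxy target could be chosen, assuming the dev server falls back to the local emulator's default endpoint (`https://localhost:8081`) when `EMULATOR_ENDPOINT` is not set. `resolveEmulatorEndpoint` is a hypothetical helper, not this repo's code. Because the emulator serves a self-signed certificate, a proxy entry pointing at it would typically also need `secure: false`.

```javascript
// The local Cosmos DB emulator listens on https://localhost:8081 by default.
const DEFAULT_EMULATOR_ENDPOINT = "https://localhost:8081";

/**
 * Hypothetical helper: choose where emulator traffic is proxied.
 * EMULATOR_ENDPOINT (e.g. a remote Windows VM) overrides the local default.
 * Returns only the origin (scheme, host, port); any path is dropped.
 */
function resolveEmulatorEndpoint(env) {
  const endpoint = env.EMULATOR_ENDPOINT || DEFAULT_EMULATOR_ENDPOINT;
  // new URL() throws on malformed input, so a typo fails at startup.
  return new URL(endpoint).origin;
}
```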

#### Setting up a Remote Emulator

@@ -55,16 +44,8 @@ The Cosmos emulator currently only runs in Windows environments. You can still d

### Portal Development

The Cosmos Portal that consumes this repo is not currently open source. If you have access to the portal project, `npm run build` will copy the built files over to the portal, where they will be loaded by the portal development environment.

You can, however, load a locally running instance of Data Explorer in the production portal.

1. Turn off browser SSL validation for localhost (chrome://flags/#allow-insecure-localhost) OR install valid SSL certs for localhost (on IE, follow these [instructions](https://www.technipages.com/ie-bypass-problem-with-this-websites-security-certificate) to install the localhost certificate in the right place)
2. Allowlist the `https://localhost:1234` domain for CORS in the Azure Cosmos DB portal
3. Start the project in portal mode: `PLATFORM=Portal npm run watch`
4. Load the portal using the following link: https://ms.portal.azure.com/?dataExplorerSource=https%3A%2F%2Flocalhost%3A1234%2Fexplorer.html

Live reload will occur, but Data Explorer will not properly re-integrate with the parent iframe. You will have to manually reload the page.
- Visit: https://ms.portal.azure.com/?dataExplorerSource=https%3A%2F%2Flocalhost%3A1234%2Fexplorer.html
- You may have to manually visit https://localhost:1234/explorer.html first and click through any SSL certificate warnings
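
The portal link above is simply the local explorer URL percent-encoded into the `dataExplorerSource` query parameter. A small sketch that rebuilds it; `buildPortalDevUrl` is a hypothetical helper, handy if you serve the explorer on a different port.

```javascript
/**
 * Hypothetical helper: builds a portal link that loads a locally served
 * Data Explorer through the `dataExplorerSource` query parameter.
 */
function buildPortalDevUrl(localExplorerUrl, portalUrl = "https://ms.portal.azure.com/") {
  // encodeURIComponent escapes ":" and "/" so the nested URL stays a single parameter value.
  return `${portalUrl}?dataExplorerSource=${encodeURIComponent(localExplorerUrl)}`;
}

console.log(buildPortalDevUrl("https://localhost:1234/explorer.html"));
// → https://ms.portal.azure.com/?dataExplorerSource=https%3A%2F%2Flocalhost%3A1234%2Fexplorer.html
```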

### Testing

@@ -76,17 +57,7 @@ Unit tests are located adjacent to the code under test and run with [Jest](https

#### End to End CI Tests

[Cypress](https://www.cypress.io/) is used for end-to-end tests, which are contained in `cypress/`. Currently, it operates as a sub-project with its own TypeScript config and dependencies. It also only operates against the emulator. To run Cypress tests:

1. Ensure the emulator is running
2. Start Cosmos Explorer in emulator mode: `PLATFORM=Emulator npm run watch`
3. Move into the `cypress/` folder: `cd cypress`
4. Install dependencies: `npm install`
5. Run Cypress headless (`npm run test`) or in interactive mode (`npm run test:debug`)

#### End to End Production Tests

Jest and Puppeteer are used for end-to-end production runners and are contained in `test/`. To run these tests locally:
Jest and Puppeteer are used for end-to-end browser-based tests and are contained in `test/`. To run these tests locally:

1. Copy `.env.example` to `.env`
2. Update the values in `.env`, including your local Data Explorer endpoint (ask a teammate/codeowner for help with `.env` values)

@@ -98,6 +69,10 @@ Jest and Puppeteer are used for end to end production runners and are contained

We generally adhere to the release strategy [documented by the Azure SDK Guidelines](https://azure.github.io/azure-sdk/policies_repobranching.html#release-branches). Most releases should happen from the master branch. If master contains commits that cannot be released, you may create a release from a `release/` or `hotfix/` branch. See linked documentation for more details.
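
The branch rule described above is small enough to encode as a check, for instance in a release script. This is a sketch only: `isReleasableBranch` is a hypothetical helper, and it assumes `release/` and `hotfix/` branches must carry a non-empty suffix.

```javascript
/**
 * Hypothetical helper: true for branches releases may be cut from,
 * i.e. master, or a release/ or hotfix/ branch with a non-empty name.
 */
function isReleasableBranch(branchName) {
  return branchName === "master" || /^(release|hotfix)\/.+/.test(branchName);
}
```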

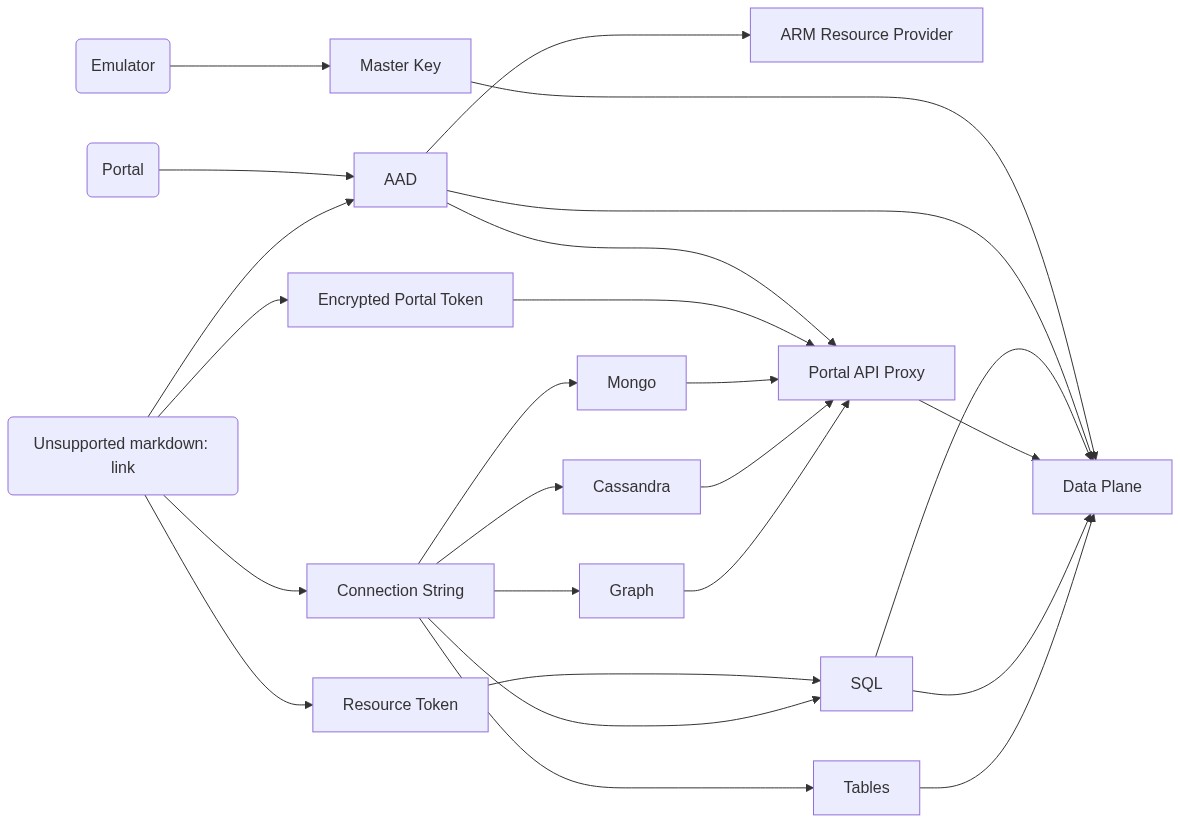

### Architecture

[](https://mermaid-js.github.io/mermaid-live-editor/#/edit/eyJjb2RlIjoiZ3JhcGggTFJcbiAgaG9zdGVkKGh0dHBzOi8vY29zbW9zLmF6dXJlLmNvbSlcbiAgcG9ydGFsKFBvcnRhbClcbiAgZW11bGF0b3IoRW11bGF0b3IpXG4gIGFhZFtBQURdXG4gIHJlc291cmNlVG9rZW5bUmVzb3VyY2UgVG9rZW5dXG4gIGNvbm5lY3Rpb25TdHJpbmdbQ29ubmVjdGlvbiBTdHJpbmddXG4gIHBvcnRhbFRva2VuW0VuY3J5cHRlZCBQb3J0YWwgVG9rZW5dXG4gIG1hc3RlcktleVtNYXN0ZXIgS2V5XVxuICBhcm1bQVJNIFJlc291cmNlIFByb3ZpZGVyXVxuICBkYXRhcGxhbmVbRGF0YSBQbGFuZV1cbiAgcHJveHlbUG9ydGFsIEFQSSBQcm94eV1cbiAgc3FsW1NRTF1cbiAgbW9uZ29bTW9uZ29dXG4gIHRhYmxlc1tUYWJsZXNdXG4gIGNhc3NhbmRyYVtDYXNzYW5kcmFdXG4gIGdyYWZbR3JhcGhdXG5cblxuICBlbXVsYXRvciAtLT4gbWFzdGVyS2V5IC0tLS0-IGRhdGFwbGFuZVxuICBwb3J0YWwgLS0-IGFhZFxuICBob3N0ZWQgLS0-IHBvcnRhbFRva2VuICYgcmVzb3VyY2VUb2tlbiAmIGNvbm5lY3Rpb25TdHJpbmcgJiBhYWRcbiAgYWFkIC0tLT4gYXJtXG4gIGFhZCAtLS0-IGRhdGFwbGFuZVxuICBhYWQgLS0tPiBwcm94eVxuICByZXNvdXJjZVRva2VuIC0tLT4gc3FsIC0tPiBkYXRhcGxhbmVcbiAgcG9ydGFsVG9rZW4gLS0tPiBwcm94eVxuICBwcm94eSAtLT4gZGF0YXBsYW5lXG4gIGNvbm5lY3Rpb25TdHJpbmcgLS0-IHNxbCAmIG1vbmdvICYgY2Fzc2FuZHJhICYgZ3JhZiAmIHRhYmxlc1xuICBzcWwgLS0-IGRhdGFwbGFuZVxuICB0YWJsZXMgLS0-IGRhdGFwbGFuZVxuICBtb25nbyAtLT4gcHJveHlcbiAgY2Fzc2FuZHJhIC0tPiBwcm94eVxuICBncmFmIC0tPiBwcm94eVxuXG5cdFx0IiwibWVybWFpZCI6eyJ0aGVtZSI6ImRlZmF1bHQifSwidXBkYXRlRWRpdG9yIjpmYWxzZX0)


# Contributing

Please read the [contribution guidelines](./CONTRIBUTING.md).

@@ -1,3 +1,4 @@
module.exports = {
  presets: [["@babel/preset-env", { targets: { node: "current" } }], "@babel/preset-react", "@babel/preset-typescript"]
  presets: [["@babel/preset-env", { targets: { node: "current" } }], "@babel/preset-react", "@babel/preset-typescript"],
  plugins: [["@babel/plugin-proposal-decorators", { legacy: true }]],
};

7

canvas/README.md

Normal file


@@ -0,0 +1,7 @@
# Why?

This adds a mock module for `canvas`. nteract has an ignored `require` of, and an undeclared dependency on, this module. `canvas` is a server-side Node module and is not used in nteract's browser-side code.

Installing it locally (`npm install canvas`) will resolve the problem, but it is a native module, so it is flaky depending on the system, Node version, processor architecture, etc. This module provides a simpler, more robust solution.

Remove this workaround if [this bug](https://github.com/nteract/any-vega/issues/2) ever gets resolved.
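
For context, an "ignored require" of an optional native module usually looks like the pattern below. It loads the module if it is present and carries on if it is not. This is an illustrative sketch, not nteract's actual code, and `tryRequire` is a hypothetical name. With the mock in place, `require("canvas")` resolves to the empty object `{}`, so feature checks on it find nothing and the browser code path is used.

```javascript
/**
 * Hypothetical illustration of an optional dependency: try to load a module
 * and fall back to undefined when it is not installed. Only "module not found"
 * is swallowed; other load errors (e.g. a broken native build) are rethrown.
 * Note: MODULE_NOT_FOUND is also raised if one of the module's own imports is missing.
 */
function tryRequire(moduleName) {
  try {
    return require(moduleName);
  } catch (error) {
    if (error && error.code === "MODULE_NOT_FOUND") {
      return undefined;
    }
    throw error;
  }
}

const canvas = tryRequire("canvas");
console.log(canvas === undefined ? "canvas not installed" : "canvas (or the mock) resolved");
```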

1

canvas/index.js

Normal file


@@ -0,0 +1 @@
module.exports = {}

11

canvas/package.json

Normal file


@@ -0,0 +1,11 @@
{
  "name": "canvas",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "author": "",
  "license": "ISC"
}

4

cypress/.gitignore

vendored


@@ -1,4 +0,0 @@
cypress.env.json
cypress/report
cypress/screenshots
cypress/videos

@@ -1,51 +0,0 @@
// Cleans up old databases from previous test runs
const { CosmosClient } = require("@azure/cosmos");

// TODO: Add support for other API connection strings
const mongoRegex = RegExp("mongodb://.*:(.*)@(.*).mongo.cosmos.azure.com");

async function cleanup() {
  const connectionString = process.env.CYPRESS_CONNECTION_STRING;
  if (!connectionString) {
    throw new Error("Connection string not provided");
  }

  let client;
  switch (true) {
    case connectionString.includes("mongodb://"): {
      const [, key, accountName] = connectionString.match(mongoRegex);
      client = new CosmosClient({
        key,
        endpoint: `https://${accountName}.documents.azure.com:443/`
      });
      break;
    }
    // TODO: Add support for other API connection strings
    default:
      client = new CosmosClient(connectionString);
      break;
  }

  const response = await client.databases.readAll().fetchAll();
  return Promise.all(
    response.resources.map(async db => {
      const dbTimestamp = new Date(db._ts * 1000);
      const twentyMinutesAgo = new Date(Date.now() - 1000 * 60 * 20);
      if (dbTimestamp < twentyMinutesAgo) {
        await client.database(db.id).delete();
        console.log(`DELETED: ${db.id} | Timestamp: ${dbTimestamp}`);
      } else {
        console.log(`SKIPPED: ${db.id} | Timestamp: ${dbTimestamp}`);
      }
    })
  );
}

cleanup()
  .then(() => {
    process.exit(0);
  })
  .catch(error => {
    console.error(error);
    process.exit(1);
  });
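
The two decisions in the cleanup script above can be isolated as pure functions and tested without a live account. One decision is parsing a Mongo API connection string, and the other is deciding whether a database is older than the cutoff. This is a sketch with hypothetical names. The regex escapes the literal dots that the original pattern leaves bare, and it throws a clear error instead of failing on destructuring when the string does not match. As in the original, `_ts` is treated as seconds since the epoch.

```javascript
// Escaped dots match literal "." characters; groups capture the key and account name.
const MONGO_CONNECTION_REGEX = /^mongodb:\/\/[^:]+:([^@]+)@([^.]+)\.mongo\.cosmos\.azure\.com/;

/** Hypothetical helper: extracts key, account name and SQL endpoint from a Mongo API connection string. */
function parseMongoConnectionString(connectionString) {
  const match = connectionString.match(MONGO_CONNECTION_REGEX);
  if (!match) {
    throw new Error("Unrecognized Mongo connection string");
  }
  const [, key, accountName] = match;
  return { key, accountName, endpoint: `https://${accountName}.documents.azure.com:443/` };
}

/** Hypothetical helper: true when `_ts` (seconds) is older than maxAgeMs before nowMs. */
function isOlderThan(tsSeconds, maxAgeMs, nowMs = Date.now()) {
  return tsSeconds * 1000 < nowMs - maxAgeMs;
}

// The script's rule would read: isOlderThan(db._ts, 20 * 60 * 1000)
```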

@@ -1,15 +0,0 @@
{
  "integrationFolder": "./integration",
  "pluginsFile": false,
  "fixturesFolder": false,
  "supportFile": "./support/index.js",
  "defaultCommandTimeout": 90000,
  "chromeWebSecurity": false,
  "reporter": "mochawesome",
  "reporterOptions": {
    "reportDir": "cypress/report",
    "json": true,
    "overwrite": false,
    "html": false
  }
}

@@ -1,66 +0,0 @@
// 1. Click on "New Container" on the command bar.
// 2. Pane with the title "Add Container" should appear on the right side of the screen
// 3. It includes an input box for the database Id.
// 4. It includes a checkbox called "Create now".
// 5. When the checkbox is marked, enter new database id.
// 6. Create a database WITH "Provision throughput" checked.
// 7. Enter minimum throughput value of 400.
// 8. Enter container id to the container id text box.
// 9. Enter partition key to the partition key text box.
// 10. Click "OK" to create a new container.
// 11. Verify the new container is created along with the database id and appears in the Data Explorer list on the left side of the screen.

const connectionString = require("../../../utilities/connectionString");

let crypt = require("crypto");

context("Cassandra API Test - createDatabase", () => {
  beforeEach(() => {
    connectionString.loginUsingConnectionString(connectionString.constants.cassandra);
  });

  it("Create a new table in Cassandra API", () => {
    const keyspaceId = `KeyspaceId${crypt.randomBytes(8).toString("hex")}`;
    const tableId = `TableId112`;

    cy.get("iframe").then($element => {
      const $body = $element.contents().find("body");
      cy.wrap($body)
        .find('div[class="commandBarContainer"]')
        .should("be.visible")
        .find('button[data-test="New Table"]')
        .should("be.visible")
        .click();

      cy.wrap($body)
        .find('div[class="contextual-pane-in"]')
        .should("be.visible")
        .find('span[id="containerTitle"]');

      cy.wrap($body)
        .find('input[id="keyspace-id"]')
        .should("be.visible")
        .type(keyspaceId);

      cy.wrap($body)
        .find('input[class="textfontclr"]')
        .type(tableId);

      cy.wrap($body)
        .find('input[data-test="databaseThroughputValue"]')
        .should("have.value", "400");

      cy.wrap($body)
        .find('input[data-test="addCollection-createCollection"]')
        .click();

      cy.wait(10000);

      cy.wrap($body)
        .find('div[data-test="resourceTreeId"]')
        .should("exist")
        .find('div[class="treeComponent dataResourceTree"]')
        .should("contain", tableId);
    });
  });
});
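
Each of these tests builds resource ids inline from a readable prefix plus `crypt.randomBytes(8).toString("hex")`. A sketch of that pattern pulled into one helper; `uniqueId` is a hypothetical name, not an existing utility in `cypress/`. Eight random bytes render as 16 hex characters, so repeated or concurrent runs are very unlikely to collide.

```javascript
const { randomBytes } = require("crypto");

/**
 * Hypothetical helper: readable prefix + 16 random hex characters,
 * mirroring the inline pattern used by the Cypress tests.
 */
function uniqueId(prefix) {
  return `${prefix}${randomBytes(8).toString("hex")}`;
}

// e.g. uniqueId("TestDatabase") returns "TestDatabase" followed by 16 hex characters
```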

@@ -1,81 +0,0 @@
// 1. Click on "New Graph" on the command bar.
// 2. Pane with the title "Add Container" should appear on the right side of the screen
// 3. It includes an input box for the database Id.
// 4. It includes a checkbox called "Create now".
// 5. When the checkbox is marked, enter new database id.
// 6. Create a database WITH "Provision throughput" checked.
// 7. Enter minimum throughput value of 400.
// 8. Enter container id to the container id text box.
// 9. Enter partition key to the partition key text box.
// 10. Click "OK" to create a new container.
// 11. Verify the new container is created along with the database id and appears in the Data Explorer list on the left side of the screen.

const connectionString = require("../../../utilities/connectionString");

let crypt = require("crypto");

context("Graph API Test", () => {
  beforeEach(() => {
    connectionString.loginUsingConnectionString(connectionString.constants.graph);
  });

  it("Create a new graph in Graph API", () => {
    const dbId = `TestDatabase${crypt.randomBytes(8).toString("hex")}`;
    const graphId = `TestGraph${crypt.randomBytes(8).toString("hex")}`;
    const partitionKey = `SharedKey${crypt.randomBytes(8).toString("hex")}`;

    cy.get("iframe").then($element => {
      const $body = $element.contents().find("body");
      cy.wrap($body)
        .find('div[class="commandBarContainer"]')
        .should("be.visible")
        .find('button[data-test="New Graph"]')
        .should("be.visible")
        .click();

      cy.wrap($body)
        .find('div[class="contextual-pane-in"]')
        .should("be.visible")
        .find('span[id="containerTitle"]');

      cy.wrap($body)
        .find('input[data-test="addCollection-createNewDatabase"]')
        .check();

      cy.wrap($body)
        .find('input[data-test="addCollection-newDatabaseId"]')
        .should("be.visible")
        .type(dbId);

      cy.wrap($body)
        .find('input[data-test="addCollectionPane-databaseSharedThroughput"]')
        .check();

      cy.wrap($body)
        .find('input[data-test="databaseThroughputValue"]')
        .should("have.value", "400");

      cy.wrap($body)
        .find('input[data-test="addCollection-collectionId"]')
        .type(graphId);

      cy.wrap($body)
        .find('input[data-test="addCollection-partitionKeyValue"]')
        .type(partitionKey);

      cy.wrap($body)
        .find('input[data-test="addCollection-createCollection"]')
        .click();

      cy.wait(10000);

      cy.wrap($body)
        .find('div[data-test="resourceTreeId"]')
        .should("exist")
        .find('div[class="treeComponent dataResourceTree"]')
        .should("contain", dbId)
        .click()
        .should("contain", graphId);
    });
  });
});

@@ -1,80 +0,0 @@
// 1. Click on "New Container" on the command bar.
// 2. Pane with the title "Add Container" should appear on the right side of the screen
// 3. It includes an input box for the database Id.
// 4. It includes a checkbox called "Create now".
// 5. When the checkbox is marked, enter new database id.
// 6. Create a database WITH "Provision throughput" checked.
// 7. Enter minimum throughput value of 400.
// 8. Enter container id to the container id text box.
// 9. Enter partition key to the partition key text box.
// 10. Click "OK" to create a new container.
// 11. Verify the new container is created along with the database id and appears in the Data Explorer list on the left side of the screen.

const connectionString = require("../../../utilities/connectionString");

let crypt = require("crypto");

context("Mongo API Test - createDatabase", () => {
  beforeEach(() => {
    connectionString.loginUsingConnectionString();
  });

  it("Create a new collection in Mongo API", () => {
    const dbId = `TestDatabase${crypt.randomBytes(8).toString("hex")}`;
    const collectionId = `TestCollection${crypt.randomBytes(8).toString("hex")}`;
    const sharedKey = `SharedKey${crypt.randomBytes(8).toString("hex")}`;

    cy.get("iframe").then($element => {
      const $body = $element.contents().find("body");
      cy.wrap($body)
        .find('div[class="commandBarContainer"]')
        .should("be.visible")
        .find('button[data-test="New Collection"]')
        .should("be.visible")
        .click();

      cy.wrap($body)
        .find('div[class="contextual-pane-in"]')
        .should("be.visible")
        .find('span[id="containerTitle"]');

      cy.wrap($body)
        .find('input[data-test="addCollection-createNewDatabase"]')
        .check();

      cy.wrap($body)
        .find('input[data-test="addCollection-newDatabaseId"]')
        .type(dbId);

      cy.wrap($body)
        .find('input[data-test="addCollectionPane-databaseSharedThroughput"]')
        .check();

      cy.wrap($body)
        .find('input[data-test="addCollection-collectionId"]')
        .type(collectionId);

      cy.wrap($body)
        .find('input[data-test="databaseThroughputValue"]')
        .should("have.value", "400");

      cy.wrap($body)
        .find('input[data-test="addCollection-partitionKeyValue"]')
        .type(sharedKey);

      cy.wrap($body)
        .find("#submitBtnAddCollection")
        .click();

      cy.wait(10000);

      cy.wrap($body)
        .find('div[data-test="resourceTreeId"]')
        .should("exist")
        .find('div[class="treeComponent dataResourceTree"]')
        .should("contain", dbId)
        .click()
        .should("contain", collectionId);
    });
  });
});

@@ -1,96 +0,0 @@
// 1. Click on "New Container" on the command bar.
// 2. Pane with the title "Add Container" should appear on the right side of the screen
// 3. It includes an input box for the database Id.
// 4. It includes a checkbox called "Create now".
// 5. When the checkbox is marked, enter new database id.
// 6. Create a database WITH "Provision throughput" checked.
// 7. Enter minimum throughput value of 400.
// 8. Enter container id to the container id text box.
// 9. Enter partition key to the partition key text box.
// 10. Click "OK" to create a new container.
// 11. Verify the new container is created along with the database id and appears in the Data Explorer list on the left side of the screen.

const connectionString = require("../../../utilities/connectionString");

let crypt = require("crypto");

context("Mongo API Test", () => {
  beforeEach(() => {
    connectionString.loginUsingConnectionString();
  });

  it.skip("Create a new collection in Mongo API - Autopilot", () => {
    const dbId = `TestDatabase${crypt.randomBytes(8).toString("hex")}`;
    const collectionId = `TestCollection${crypt.randomBytes(8).toString("hex")}`;
    const sharedKey = `SharedKey${crypt.randomBytes(8).toString("hex")}`;

    cy.get("iframe").then($element => {
      const $body = $element.contents().find("body");
      cy.wrap($body)
        .find('div[class="commandBarContainer"]')
        .should("be.visible")
        .find('button[data-test="New Collection"]')
        .should("be.visible")
        .click();

      cy.wrap($body)
        .find('div[class="contextual-pane-in"]')
        .should("be.visible")
        .find('span[id="containerTitle"]');

      cy.wrap($body)
        .find('input[data-test="addCollection-createNewDatabase"]')
        .check();

      cy.wrap($body)
        .find('input[data-test="addCollection-newDatabaseId"]')
        .type(dbId);

      cy.wrap($body)
        .find('input[data-test="addCollectionPane-databaseSharedThroughput"]')
        .check();

      cy.wrap($body)
        .find('div[class="throughputModeContainer"]')
        .should("be.visible")
        .and(input => {
          expect(input.get(0).textContent, "first item").contains("Autopilot (preview)");
          expect(input.get(1).textContent, "second item").contains("Manual");
        });

      cy.wrap($body)
        .find('input[id="newContainer-databaseThroughput-autoPilotRadio"]')
        .check();

      cy.wrap($body)
        .find('select[name="autoPilotTiers"]')
        // .eq(1).should('contain', '4,000 RU/s');
        // // .select('4,000 RU/s').should('have.value', '1');

        .find('option[value="2"]')
        .then($element => $element.get(1).setAttribute("selected", "selected"));

      cy.wrap($body)
        .find('input[data-test="addCollection-collectionId"]')
        .type(collectionId);

      cy.wrap($body)
        .find('input[data-test="addCollection-partitionKeyValue"]')
        .type(sharedKey);

      cy.wrap($body)
        .find('input[data-test="addCollection-createCollection"]')
        .click();

      cy.wait(10000);

      cy.wrap($body)
        .find('div[data-test="resourceTreeId"]')
        .should("exist")
        .find('div[class="treeComponent dataResourceTree"]')
        .should("contain", dbId)
        .click()
        .should("contain", collectionId);
    });
  });
});

@@ -1,67 +0,0 @@
const connectionString = require("../../../utilities/connectionString");

let crypt = require("crypto");

context("Mongo API Test", () => {
  beforeEach(() => {
    connectionString.loginUsingConnectionString();
  });